Decision Trees: An Intuitive Approach with Scikit-Learn in Python

Decision trees are powerful, intuitive machine learning algorithms that reach a prediction through a sequence of simple decisions. The process resembles real-life decision-making: it starts at a single root and branches out based on conditions until it reaches a final decision, called a leaf.

In this guide, we’ll walk through building a decision tree with Scikit-Learn, a widely used Python library and a go-to choice for many data science practitioners. Other machine learning libraries, such as TensorFlow, are available, but Scikit-Learn remains popular for its simplicity and efficiency.

Understanding Classifiers

Picture this: you’re tasked with writing software that can determine whether an image shows a male or a female. Hand-coding rules for such a task is exceedingly complex, and if the categories change, you’d have to rewrite the rules all over again. Enter machine learning, a more elegant solution.

Instead of hardcoding every possible condition, machine learning allows us to use algorithms, referred to as classifiers, to infer these rules. A classifier essentially processes input data and predicts a category or label for that data. For instance, given an image of an individual, the classifier would ascertain whether it depicts a male or female.

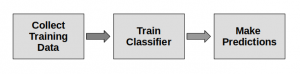

The typical flow with classifiers involves:

- Gathering data

- Training the classifier with this data

- Making predictions using the trained classifier

For our decision tree classifier, we’ll be feeding it labeled data, a process known as supervised learning. Though our example utilizes simple data arrays, real-world applications generally require substantial datasets for accurate predictions.
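The three steps above can be sketched with a tiny, made-up dataset. This is a minimal illustration and not the article's own example. The single numeric feature and the labels "small" and "large" are hypothetical.

```python
from sklearn import tree

# 1. Gather labeled data (hypothetical): each row of X is one example,
#    and y holds the matching label for each row.
X = [[1], [2], [3], [8], [9], [10]]
y = ["small", "small", "small", "large", "large", "large"]

# 2. Train the classifier on the labeled examples.
clf = tree.DecisionTreeClassifier(random_state=0)
clf.fit(X, y)

# 3. Predict labels for inputs the model has not seen before.
predictions = clf.predict([[2.5], [9.5]])
print(predictions)  # ['small' 'large']
```

Every Scikit-Learn classifier follows this `fit` then `predict` pattern, so the same three steps carry over if you later swap in a different model.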

Visualizing Decision Trees

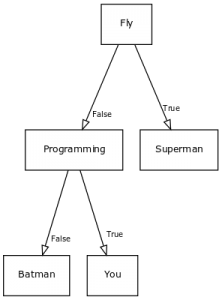

At every internal node of a decision tree there is a choice: go left or go right. The choice is made by testing a condition on the input. As you follow the branches from the root, you eventually arrive at an outcome, called a leaf.
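To make the traversal concrete, here is a hypothetical two-question tree written by hand as nested conditions. The questions and outcomes are invented for illustration. A learned decision tree performs the same kind of walk from root to leaf, except that the conditions are discovered from data rather than written by hand.

```python
def decide(is_raining: bool, is_weekend: bool) -> str:
    """A hand-written two-level decision tree (hypothetical example)."""
    if is_raining:                    # root node: test the first condition
        if is_weekend:                # internal node: test the second condition
            return "Watch a movie"    # leaf
        return "Take the bus"         # leaf
    if is_weekend:                    # internal node on the other branch
        return "Go hiking"            # leaf
    return "Walk to work"             # leaf


print(decide(is_raining=True, is_weekend=False))  # Take the bus
```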

A computer navigates the tree using numerical input, and boolean values such as False and True are treated as 0 and 1. For instance, given the input [False, True], the tree in the figure above might predict ‘You’.
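The figure's exact questions aren't spelled out, so the sketch below assumes a tree learned from two yes/no questions with made-up labels. It shows that Scikit-Learn accepts booleans as features, converting them to 0.0 and 1.0. It also uses `tree.export_text` to print the learned rules as text. The feature names `question_1` and `question_2` are hypothetical.

```python
from sklearn import tree

# Hypothetical training data: answers to two yes/no questions per example.
X = [[False, False], [False, True], [True, False], [True, True]]
y = ["Nobody", "You", "Me", "Both"]  # hypothetical labels

clf = tree.DecisionTreeClassifier(random_state=0)
clf.fit(X, y)  # booleans are converted to floats (0.0 / 1.0) internally

print(clf.predict([[False, True]]))  # ['You']

# Print the learned splits as indented text rules.
rules = tree.export_text(clf, feature_names=["question_1", "question_2"])
print(rules)
```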

Setting up Scikit-Learn

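To begin, make sure Scikit-Learn is installed. On PyPI the package is named `scikit-learn`, although you import it in Python as `sklearn`. The old `sklearn` package name on PyPI is deprecated. If Scikit-Learn isn't installed yet, use pip:

```shell
pip install scikit-learn
```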

Scikit-Learn depends on NumPy and SciPy, and pip installs both automatically as dependencies. If you want to install SciPy explicitly, run:

```shell
pip install scipy
```
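A quick way to confirm that the installation worked is to import both packages and print their versions. The exact version numbers will depend on your environment.

```python
# Sanity check: both imports succeed only if the packages are installed.
import scipy
import sklearn

print("scikit-learn", sklearn.__version__)
print("SciPy", scipy.__version__)
```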

Crafting a Decision Tree

First, import the `tree` module and create an untrained decision tree classifier:

```python
from sklearn import tree
clf = tree.DecisionTreeClassifier()  # untrained decision tree model
```
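Calling `DecisionTreeClassifier()` with no arguments uses Scikit-Learn's defaults. The sketch below passes a few real constructor parameters explicitly. The values chosen are illustrative only, not recommendations from this guide.

```python
from sklearn import tree

clf = tree.DecisionTreeClassifier(
    criterion="gini",  # impurity measure used to choose splits; "entropy" is another option
    max_depth=3,       # cap the tree depth to limit overfitting; None lets it grow until leaves are pure
    random_state=0,    # fixes tie-breaking between equally good splits, for reproducible trees
)

print(clf.get_params()["max_depth"])  # 3
```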

Next, let’s set up our training data:

```python
# Features: [height, hair-length, voice-pitch]
```
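The original data values aren't shown, so the rows below are hypothetical. They assume centimetres for height and hair length and hertz for voice pitch. The sketch shows the layout Scikit-Learn expects: a 2-D feature array with one row per example and one column per feature, plus a 1-D label array of the same length.

```python
import numpy as np

# Hypothetical training data: [height (cm), hair length (cm), voice pitch (Hz)]
X = np.array([
    [180, 5, 110],
    [175, 8, 120],
    [190, 3, 100],
    [160, 30, 210],
    [165, 35, 220],
    [155, 40, 200],
])
# One label per row of X, in the same order
y = np.array(["male", "male", "male", "female", "female", "female"])

print(X.shape)  # (6, 3): 6 examples, 3 features
print(y.shape)  # (6,)
assert len(X) == len(y), "every example needs exactly one label"
```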

Now, let’s train the classifier and make a prediction:

```python
from sklearn import tree
```
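Putting the pieces together, here is one possible end-to-end version. The training rows and the two query examples are hypothetical, since the article's actual data isn't shown.

```python
from sklearn import tree

# Hypothetical data: [height (cm), hair length (cm), voice pitch (Hz)]
features = [[180, 5, 110], [175, 8, 120], [190, 3, 100],
            [160, 30, 210], [165, 35, 220], [155, 40, 200]]
labels = ["male", "male", "male", "female", "female", "female"]

# Train the classifier on the labeled examples (fit returns the fitted model)
clf = tree.DecisionTreeClassifier(random_state=0)
clf = clf.fit(features, labels)

# Predict labels for two new (hypothetical) people
print(clf.predict([[185, 4, 105], [158, 32, 215]]))  # ['male' 'female']
```

In this toy data, every feature separates the two groups perfectly, so the learned tree needs only a single split (`clf.get_depth()` returns 1). With real, noisier data the tree would grow deeper.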
