In this article you’ll learn about neural networks. What is a neural network? The human brain can be seen as a neural network: an interconnected web of neurons.

In machine learning, there exists an algorithm known as an Artificial Neural Network. It is artificial in the sense that it mimics biological neural networks: it’s not a real neural network, only a computer model of one.

However, like a real neural network (a brain), the artificial model can learn from experience (data). A neural network always starts with a single unit: the perceptron.

Related course: Complete Machine Learning Course with Python

Introduction

A perceptron

Let’s talk about neural networks. Like any network, a neural network is made out of entities. One such entity is the perceptron. A single perceptron is the basis of a neural network.

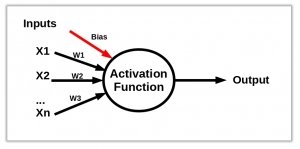

A perceptron has:

- one or more inputs

- a bias

- an activation function

- a single output

A perceptron receives inputs and multiplies each one by a weight. The weighted inputs are summed, a bias value is added, and the result is sent into an activation function to produce the output. The bias allows you to shift the activation function.
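To make the computation concrete, here is a minimal sketch of a single perceptron in NumPy. The step activation, the function names, and the hand-picked weights and bias are assumptions chosen for illustration (they make the perceptron behave like a logical AND); a trained perceptron would learn its weights from data.

```python
import numpy as np

def step(z):
    """Step activation: output 1 when the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0

def perceptron_output(inputs, weights, bias):
    """Multiply inputs by weights, sum them, add the bias, then apply the activation."""
    z = np.dot(inputs, weights) + bias
    return step(z)

# Hypothetical weights and bias, picked by hand so the unit acts as a logical AND
weights = np.array([1.0, 1.0])
bias = -1.5

for inputs in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(inputs, perceptron_output(np.array(inputs), weights, bias))
```

Only the input `[1, 1]` pushes the weighted sum above zero, so it is the only case that outputs 1. Changing the bias to -0.5 would shift the threshold and turn the same unit into a logical OR.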

Network Layers

A neural network is created by stacking layers of perceptrons together. The result is known as a multi-layer perceptron (MLP).

We’ll have several layers:

| Layer | Purpose |
|---|---|
| Input layer | Your data |
| Hidden layer | Layers between the input and output layers |
| Output layer | Results |

Graphically that looks like this (source: Wikipedia):

Using the sklearn machine learning module, you can create a perceptron with one line of code:

clf = Perceptron(tol=1e-3, random_state=0)

The same is true for creating a neural network: the sklearn module has an existing implementation of both.

Of course, you need to import the sklearn module and have some data. After training the Perceptron (the fit method), you can calculate its score or make predictions.

from sklearn.datasets import load_digits
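As a minimal sketch of how these pieces could fit together, the snippet below trains a Perceptron on sklearn's bundled digits dataset and then scores it. Scoring on the training data itself is a simplification for brevity; the variable names `accuracy` and `first_predictions` are ours.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import Perceptron

# Load the bundled handwritten-digits dataset as features X and labels y
X, y = load_digits(return_X_y=True)

# Create and train the perceptron
clf = Perceptron(tol=1e-3, random_state=0)
clf.fit(X, y)

# Mean accuracy on the data it was trained on (an optimistic estimate)
accuracy = clf.score(X, y)

# Predicted digit for the first five images
first_predictions = clf.predict(X[:5])

print(accuracy)
print(first_predictions)
```

For an honest estimate of how well the model generalizes, you would score it on data it has not seen during training.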

Example Neural Network

Preparing the data

Let’s make a classifier for the Iris data set. This is a standard dataset that is used for Machine Learning algorithms, but we’ll now use it for Deep Learning (Neural Networks).

The dataset consists of measurements of flowers (‘sepal-length’, ‘sepal-width’, ‘petal-length’, ‘petal-width’) and a class for every measurement.

First load the dataset (it’s a csv file).

import pandas as pd
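The location of the csv file is not shown here, so as a sketch we read a few sample rows from an in-memory string instead. The column names follow the description above; that the file has no header row, and the name `csv_text`, are assumptions.

```python
import io
import pandas as pd

# Three sample rows standing in for the csv file (one per class);
# the full dataset has 150 rows
csv_text = """5.1,3.5,1.4,0.2,Iris-setosa
7.0,3.2,4.7,1.4,Iris-versicolor
6.3,3.3,6.0,2.5,Iris-virginica
"""

names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'Class']

# pd.read_csv accepts a path, a URL, or a file-like object;
# names= supplies the column names because the data has no header row
irisdata = pd.read_csv(io.StringIO(csv_text), names=names)

print(irisdata)
```

With a real file you would pass its path or URL to `pd.read_csv` in place of the `io.StringIO` object.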

Because the measurements are in the first 4 columns, we’ll take only those (the 5th column is the class or type of flower). We’ll store all the measurements in variable X and all the flower classes in variable y.

X = irisdata.iloc[:, 0:4]
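A minimal sketch of this split follows. To keep it runnable without the csv file, it rebuilds the same table from sklearn's bundled copy of the Iris data; that stand-in, and selecting the class column by name, are assumptions rather than the article's exact code.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Stand-in for the csv: rebuild the table from sklearn's bundled Iris data
iris = load_iris()
irisdata = pd.DataFrame(
    iris.data,
    columns=['sepal-length', 'sepal-width', 'petal-length', 'petal-width'],
)
irisdata['Class'] = [f"Iris-{iris.target_names[t]}" for t in iris.target]

X = irisdata.iloc[:, 0:4]   # the four measurement columns
y = irisdata[['Class']]     # the class column, kept as a one-column DataFrame

print(X.shape, y.shape)
```

Using double brackets (`[['Class']]`) keeps y as a DataFrame with one column; single brackets would give a Series instead.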

Variable y contains categorical values, but machine learning algorithms work with numbers. The contents of variable y are now:

Class

0 Iris-setosa

1 Iris-setosa

2 Iris-setosa

3 Iris-setosa

4 Iris-setosa

.. ...

145 Iris-virginica

146 Iris-virginica

147 Iris-virginica

148 Iris-virginica

149 Iris-virginica

[150 rows x 1 columns]

You can easily convert y from categorical values (strings) to numerical values with sklearn:

from sklearn import preprocessing
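One way to do this conversion is sklearn's LabelEncoder, sketched below on a small stand-in for the Class column. The four sample labels and the name `y_encoded` are assumptions for illustration.

```python
import pandas as pd
from sklearn import preprocessing

# Small stand-in for the Class column of the Iris data
y = pd.Series(
    ['Iris-setosa', 'Iris-setosa', 'Iris-versicolor', 'Iris-virginica'],
    name='Class',
)

le = preprocessing.LabelEncoder()

# Each distinct string gets an integer; classes are numbered in sorted order
y_encoded = le.fit_transform(y)

print(list(le.classes_))
print(y_encoded)

# The encoder also converts numbers back to the original class names
print(le.inverse_transform([2]))
```

Keeping the fitted encoder around is useful later: it lets you translate the network's numeric predictions back into flower names.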

Variable y is then numerical:

Class

0 0

1 0

2 0

3 0

4 0

.. ...

145 2

146 2

147 2

148 2

149 2

[150 rows x 1 columns]

Then, before training, make sure the features are on a comparable scale, so that no single measurement dominates the others. This is called feature scaling.

You can do that with a few lines of code:

# Feature scaling
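A minimal sketch of feature scaling with sklearn's StandardScaler is shown below. The train/test split, its 80/20 ratio, and the use of sklearn's bundled Iris data as a stand-in for X and y are assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Stand-in for the X and y built from the csv file
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows for testing (the ratio is an assumption)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=0
)

# Feature scaling: learn mean and standard deviation from the training rows only,
# then apply the same transformation to both sets
scaler = StandardScaler()
scaler.fit(X_train)
X_train = scaler.transform(X_train)
X_test = scaler.transform(X_test)

# After scaling, every training column has mean 0 and standard deviation 1
print(X_train.mean(axis=0).round(2))
print(X_train.std(axis=0).round(2))
```

Fitting the scaler on the training rows only keeps information about the test rows from leaking into training.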


Predicting

Let’s get to the cool part: prediction.

Then you can load the neural network and train it:

from sklearn.neural_network import MLPClassifier

We created the neural network with this line:

mlp = MLPClassifier(hidden_layer_sizes=(10, 10, 10), max_iter=1000)

where the first parameter, hidden_layer_sizes, describes the layers between input and output: here, three hidden layers of 10 neurons each. The parameter max_iter sets the maximum number of training iterations, during which the weights are updated.
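The sketch below trains such a network and inspects the shape of its layers. The data preparation (sklearn's bundled Iris data, an 80/20 split, StandardScaler) and the `random_state` arguments are assumptions added to make the snippet self-contained and repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import StandardScaler

# Stand-in data preparation: load, split, and scale the Iris measurements
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=0
)
scaler = StandardScaler().fit(X_train)
X_train = scaler.transform(X_train)
X_test = scaler.transform(X_test)

# Three hidden layers of 10 neurons each; random_state only makes runs repeatable
mlp = MLPClassifier(hidden_layer_sizes=(10, 10, 10), max_iter=1000, random_state=0)
mlp.fit(X_train, y_train)

# One weight matrix per connection between consecutive layers
layer_shapes = [w.shape for w in mlp.coefs_]
print(layer_shapes)

# Mean accuracy on the held-out test rows
print(mlp.score(X_test, y_test))
```

The printed shapes are `[(4, 10), (10, 10), (10, 10), (10, 3)]`: 4 input features feed the first hidden layer, and the last hidden layer feeds 3 outputs, one per flower class.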

Then you can use the neural network (mlp) to make predictions for new flowers.

Given some measurements, it will predict the class they belong to. If the network was trained on scaled features, pass new measurements through the same scaler before calling predict.

predictions = mlp.predict( [[5,3,1,0.2]] )

predictions = mlp.predict( [[6,5,6,1.2]] )

Example

You can copy and paste the example below. It creates a neural network (a classifier) that predicts the type of flower for new measurements.

import pandas as pd
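Putting the steps together, an end-to-end version could look like the sketch below. It is not the article's exact script: the data comes from sklearn's bundled copy of Iris rather than the csv file, and the 80/20 split, the `random_state` values, and the names `new_flowers` and `predicted_names` are assumptions.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.preprocessing import LabelEncoder, StandardScaler

# Stand-in for loading the csv file: build the same table from sklearn's Iris data
iris = load_iris()
features = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width']
irisdata = pd.DataFrame(iris.data, columns=features)
irisdata['Class'] = [f"Iris-{iris.target_names[t]}" for t in iris.target]

# Measurements go into X; class names are encoded as numbers in y
X = irisdata.iloc[:, 0:4]
le = LabelEncoder()
y = le.fit_transform(irisdata['Class'])

# Hold out 20% of the rows for testing
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.20, random_state=0
)

# Feature scaling, fitted on the training rows only
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# Create and train the neural network
mlp = MLPClassifier(hidden_layer_sizes=(10, 10, 10), max_iter=1000, random_state=0)
mlp.fit(X_train, y_train)
print("test accuracy:", mlp.score(X_test, y_test))

# Predict the class of new flowers; scale them with the same scaler first
new_flowers = pd.DataFrame([[5, 3, 1, 0.2], [6, 5, 6, 1.2]], columns=features)
predictions = mlp.predict(scaler.transform(new_flowers))

# Translate numeric predictions back into flower names
predicted_names = le.inverse_transform(predictions)
print(predicted_names)
```

The new measurements are scaled with the scaler fitted on the training data, because the network has only ever seen scaled values.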
